10 Million Steps

Articles on leadership and mental models for understanding the world. All articles are written by Wojciech Gryc.

User Experience and AI: the GPT-3 'click'

by Wojciech Gryc

A great deal has been written about GPT-3, the hype around it, and its potential impact on AI, machine learning, and data science. This post looks at the user experience around GPT-3 instead. Specifically, why do some people see GPT-3 as a magical innovation? What does this tell us about AI-driven products we don’t fully understand?

I’ve been exploring GPT-3 for the past few weeks and have been incredibly impressed with its ability to take my natural language prompts and generate helpful responses. More importantly, I’ve organized a few demo events and discussions about GPT-3 and have seen people play and interact with it.

The GPT-3 Click

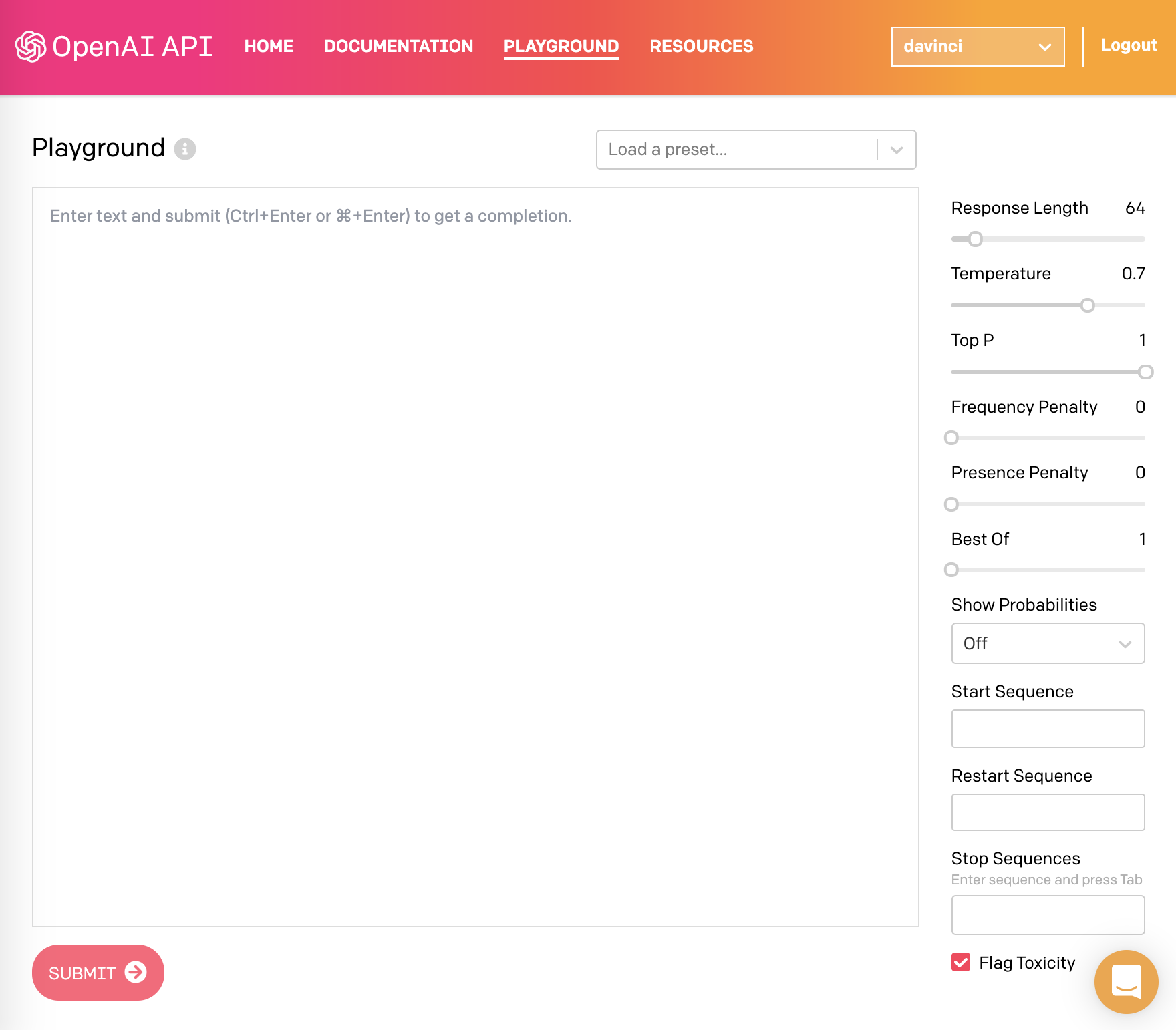

When you first log into the GPT-3 Playground (i.e., the demo interface), you are met with an empty text box and several modeling options. You’re expected to write, to chat, or to input some text–what you say or do is up to you. It can be overwhelming in its simplicity.

The OpenAI GPT-3 Playground

There are sample text prompts to show you how to generate a Q&A session, or an English/French translation, or a story… What is critical, however, is that the entire interface is a text box–as the user, you simply provide text (i.e., the prompt) and ask GPT-3 to do the rest.
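To make "the prompt is just text" concrete, here is a minimal sketch in Python of how such a prompt might be assembled. The function name `build_prompt` and the Q/A layout are assumptions for illustration only; they are not the Playground's actual sample prompts or an official template, and the sketch deliberately stops short of calling any API.

```python
from typing import List, Tuple


def build_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a plain-text prompt from a task line, examples, and a query.

    The layout (task line, Q/A pairs, open-ended final "A:") is an
    illustrative assumption, not an official OpenAI template.
    """
    lines = [task, ""]
    for question, answer in examples:
        lines.append(f"Q: {question}")
        lines.append(f"A: {answer}")
        lines.append("")
    lines.append(f"Q: {query}")
    lines.append("A:")  # left open for the model to complete
    return "\n".join(lines)


# Hypothetical English/French translation prompt.
prompt = build_prompt(
    task="Translate English to French.",
    examples=[("Good morning.", "Bonjour."), ("Thank you.", "Merci.")],
    query="Where is the library?",
)
print(prompt)
```

Everything the model receives is the string in `prompt`; changing the task line or the examples changes the "program" without touching any code beyond this text.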

A new user invariably does the following:

- They generate a chat prompt. They ask GPT-3 some existential questions. They giggle or smile at the responses; or maybe they frown if the responses don’t make sense to them.

- They then try something more flexible… They ask GPT-3 to answer questions under different personas; “You’re now a grumpy old professor”; “You’re Donald Trump.”; “You’re a Zen Buddhist and you fear climate change. What do you think of the world?”

- Then they try something more complex. Maybe they try to get it to generate Python code. Or a Mandarin translation… Or a poem in the style of T.S. Eliot.

…Then they realize that this entire time, they’ve been programming a model–without code; without strict syntax; solely with verbal direction and ideas that are interpreted and acted upon by a software machine somewhere in the cloud.

… That’s when their eyes light up…

GPT-3 isn’t intelligent; can’t reason; and struggles to add 5-digit numbers… But it takes loosely worded, ambiguous commands and does its best, and this lights a fire in the hearts of its users.

This click is difficult to generate and rarely happens without exploring the API. It’s hard to do this when you read a few examples in a blog post; it’s about interacting with GPT-3’s interface in a way that enables you to choose which topics matter to you and see how GPT-3 responds to you and your ideas.

Lessons for AI-driven Products

AI-driven products differ from their non-AI-driven counterparts because the underlying AI can be unpredictable. The AI is sometimes logical, sometimes comically wrong, and always different from the fully predictable software you typically use, where instructions are executed literally and without deviation.

An AI software agent is different because it interpolates, generates, and predicts based on the data it’s been given. This leads to uncertainty. Ideally it makes fewer errors or takes less time than a human doing the same task would, but it means users will experience some unpredictability and, at some point, actual errors. Most painfully to a product manager, it means the experiences might be inconsistent or difficult to debug or understand.

Let’s illustrate with a product recommendation example… I don’t know how many hours of human effort would be required to generate product recommendations on Amazon, but I imagine there aren’t enough humans on this planet to do so… Let alone to do it in real-time as you browse books, video games, and cooking utensils. And as a result the recommendations sometimes produce joy, but sometimes are hilariously wrong.

What does this mean for product design? Below are a few lessons for AI-driven product managers based on the GPT-3 click experience.

AI products require a form of play. You need to interact with them, learn their limitations, and also be surprised by them to truly understand what’s possible. The element of surprise–the GPT-3 click above–is what drives trust in the models.

AI products require forgiveness. They will make mistakes and you need to understand this. Young children make brash and rude comments at times; the AI might make silly mistakes as well. Training the user to forgive while playing is critical.

AI products work best in gray areas: situations where decisions must scale, be based on data, and happen too quickly for a human to contribute or oversee the results. The product recommender example above is apt; so is predicting text based on a persona, as GPT-3 does. Gray areas can tolerate unpredictable mistakes; where mistakes can’t be tolerated, use a human or write code that never deviates from its instructions.

Play and forgiveness, speed and scalability: these are critical to understanding where AI-driven models, features, and products benefit users, and also how they may fall short. These are by no means universal principles, or comprehensive… But AI-driven products will only get more complex and pervasive. We need to think about how to build the user experience and encourage users to trust these products.

This is where the GPT-3 click comes about. You chat with it for 5 minutes, 10 minutes, or more, and eventually you learn to trust its intuitions and linguistic responses… Is it alive? No. Is it actually intelligent? No. Does it have something new and special to offer the world? Absolutely yes.